First thoughts on moving my blog from Jekyll & GitHub Pages to Micro.blog

Spent a little time looking at how I can migrate my historical posts and photos to Mb. If I’m going to go all in, it’s important that I can keep everything in one place. This Mb help post shows that it’s possible to upload Markdown files, which is good news since that’s how my current blog’s posts are stored. It also lists which front matter Micro.blog supports (it’s Hugo behind the scenes).

A few takeaways:

I’m going to lose my tags. This probably isn’t the end of the world, as most of my tags should really be categories anyway. Most of my posts have many tags, since the original idea was to help surface relevant posts on any number of topics. Some basic testing shows that if I try to import a post with several tags as-is, they’re processed and shown in Mb as one long category (see image below). This is probably because I use spaces rather than commas to separate tags, which works fine in Jekyll. Nothing major in the blog relies on tags. There are a few links, particularly from the country page of my blog, which links to a page of all posts tagged with each country, but I can restructure that and achieve the same result with categories. So in the example below, I will remove all tags except Finland. I could keep all the tags, but I would end up with hundreds and hundreds of categories.
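Stripping the tags down could be scripted rather than done post by post. A rough sketch in Python, assuming each post’s tags sit on a single space-separated `tags:` line (as described above) and that a YAML list under a `categories:` key is what the Mb import expects, which is worth checking against the help post. `KEEP` is a hypothetical allow-list; only Finland comes from this post.

```python
import re

# Hypothetical allow-list of tags worth keeping as categories (e.g. countries).
KEEP = {"Finland", "Serbia", "Belgium"}


def tags_to_categories(front_matter: str, keep: set[str] = KEEP) -> str:
    """Replace a space-separated Jekyll `tags:` line with a `categories:` list.

    Only tags found in `keep` survive. Assumes all tags are on one line,
    e.g. `tags: travel Finland sauna`.
    """
    out = []
    for line in front_matter.splitlines():
        m = re.match(r"^tags:\s*(.*)$", line)
        if m:
            kept = [t for t in m.group(1).split() if t in keep]
            if kept:
                out.append("categories: [" + ", ".join(kept) + "]")
            # If no tag is kept, the tags line is simply dropped.
        else:
            out.append(line)
    return "\n".join(out)
```

Anything not in the allow-list is dropped, so the list itself doubles as the new, much shorter set of categories.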

Re-tagging/categorising will help clear things up a bit too, although there’s going to be some manual work. Hopefully it’ll also help me reduce the number of categories I use and keep things simple.

Another piece of front matter I use is ‘redirect_from’, which I set up because the slugs from my old blog don’t match the ones on the Jekyll blog. I can live without these; they’re not particularly important. I don’t have any logs from GitHub Pages, so I don’t know how often they’re used, but I’m guessing they’re barely used at all.

I’ll also lose two custom fields I set up: ‘history’, which I used to record the date of the activity in a post alongside the publish date, since the two can differ quite a lot, and ‘location’, which I intended to indicate where the post is relevant to. Neither field links anywhere. I ended up using location quite widely, whereas history hardly gets used at all. The post below about my trip to Belgrade shows both in action.
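Since ‘redirect_from’, ‘history’ and ‘location’ won’t carry over anyway, they could be stripped before upload so they don’t end up as noise in Mb. A minimal sketch, assuming simple `key: value` front matter where list values (like redirect_from entries) are indented or start with `-` on the lines after their key; a proper YAML parser would be more robust.

```python
# Front matter keys that won't carry over to Mb (taken from the post above).
DROP_KEYS = {"redirect_from", "history", "location"}


def strip_keys(front_matter: str, drop: set[str] = DROP_KEYS) -> str:
    """Remove the given top-level keys, plus any indented or '-' list lines under them."""
    out = []
    skipping = False
    for line in front_matter.splitlines():
        # A non-indented, non-list line containing ':' starts a new top-level key.
        if line and not line[0].isspace() and not line.startswith("-") and ":" in line:
            key = line.split(":", 1)[0].strip()
            skipping = key in drop
        if not skipping:
            out.append(line)
    return "\n".join(out)
```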

Another observation is that for images to upload to Mb, their paths need to be absolute URLs, which they aren’t at present. This should be relatively easy: I just need to find and replace ‘/assets/’ with ‘https://andrews.io/assets/’ and we’re good to go. I only use the assets folder for images, so that’s straightforward.
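One catch with a plain find and replace: any URL that is already absolute also contains ‘/assets/’ and would get mangled into something like `https://andrews.iohttps://andrews.io/assets/...`. A regex that only rewrites root-relative paths avoids that and can safely be run more than once. A sketch, with the site URL taken from above and the rest assumed:

```python
import re

SITE = "https://andrews.io"

# Match "/assets/" only where it begins a root-relative path: the character
# before it must not be a letter, digit, underscore or dot. That skips URLs
# that are already absolute ("https://andrews.io/assets/...") and "../assets/".
RELATIVE_ASSETS = re.compile(r"(?<![\w.])/assets/")


def absolutise_assets(text: str, site: str = SITE) -> str:
    """Rewrite root-relative /assets/ paths to absolute URLs on `site`."""
    # A function replacement avoids any backslash escaping issues in `site`.
    return RELATIVE_ASSETS.sub(lambda _m: site + "/assets/", text)
```

Because already-absolute URLs are left alone, running it over the whole `_posts` folder twice does no harm.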

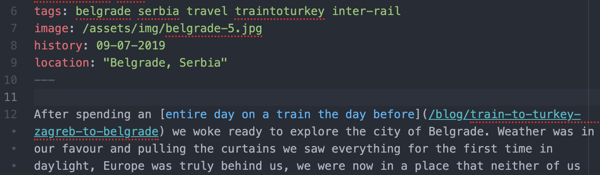

Unfortunately, many of my posts have the main image in the front matter rather than in the body of the post (see example below). This doesn’t seem to import correctly, so where this is the case I’ll need to add that image to the body of the post for it to upload properly.
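Moving the front-matter image into the body could also be automated. A sketch, assuming the key is called `image` (a guess; swap in whatever name the Jekyll theme actually uses), that it holds a single path on one line, and that the front matter is delimited by `---` lines. The path is copied as-is, so it would still need the absolute-URL fix mentioned above.

```python
import re


def hoist_image(post: str, key: str = "image") -> str:
    """Move a one-line front-matter image to the top of the post body.

    `key` is a hypothetical name for the front-matter field holding the image.
    Posts without front matter, or without that key, are returned unchanged.
    """
    m = re.match(r"^---\n(.*?)\n---\n(.*)$", post, re.DOTALL)
    if not m:
        return post
    front, body = m.group(1), m.group(2)

    kept, image = [], None
    pattern = re.compile(rf"^{re.escape(key)}:\s*(\S+)\s*$")
    for line in front.splitlines():
        found = pattern.match(line)
        if found and image is None:
            image = found.group(1).strip("'\"")  # drop optional YAML quotes
        else:
            kept.append(line)
    if image is None:
        return post

    # Only add the image if the body doesn't already reference it.
    if image not in body:
        body = f"![]({image})\n\n{body}"
    return "---\n" + "\n".join(kept) + "\n---\n" + body
```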

Another link issue is that there’s a fair amount of cross-linking between posts (see the example below). I’m going to need to give this some thought, as the uploaded post in Mb takes on a completely new slug format (e.g. micro.andrews.io/2018/11/13/helsinki.html). Keeping these links working isn’t critical, but many of them are there for a reason. I think I might just have to upload everything, then export a list of these links and manually correct them with the new Mb slugs. Eventually I’m going to want to move Mb from micro.andrews.io to andrews.io, which needs more thought still. Ugh.
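For the “export a list of these links” step, a small script could scan the posts and dump every internal link to CSV for manual fixing. A sketch, assuming posts live in Jekyll’s usual `_posts` folder as `.md` files and that internal links are either root-relative (`/...`) or point at andrews.io; `/assets/` paths are skipped since those are images.

```python
import csv
import re
import sys
from pathlib import Path

# Markdown link targets that are root-relative ("/...") or on andrews.io,
# excluding image paths under /assets/.
LINK = re.compile(r"\]\(((?:https?://(?:www\.)?andrews\.io)?/(?!assets/)[^)\s]*)\)")


def internal_links(text: str) -> list[str]:
    """Return every internal link target found in a post's Markdown."""
    return LINK.findall(text)


def main(posts_dir: str = "_posts") -> None:
    """Write a file,link CSV of internal links to stdout."""
    writer = csv.writer(sys.stdout)
    writer.writerow(["file", "link"])
    for path in sorted(Path(posts_dir).glob("*.md")):
        for link in internal_links(path.read_text(encoding="utf-8")):
            writer.writerow([path.name, link])


if __name__ == "__main__":
    main(*sys.argv[1:2])
```

Running it as `python find_links.py _posts > links.csv` (the script name is hypothetical) would give a spreadsheet to work through once the new Mb slugs are known.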

Lastly, I really like my Archive format. I would love to have an archive page in Mb that lists ‘titled’ posts in a similar way. I use the Archive a lot to look back and quickly reflect, based on the titles, on some of the things I did each year. Maybe this is possible; it’s a bit beyond my knowledge at present. Perhaps I could create a category named ‘Important’ or similar that lists these.

Of course, design is another topic entirely, but one that isn’t at the top of the list. If I can get everything migrated properly, I know I can change the design as and when.